What is generative AI, and why is it so popular?

Generative AI is getting a lot of attention these days, but what is it exactly? We’ve got the answers.

Generative AI refers to computer programs that produce new content, such as text, images, and video, based on the data they were trained on. They generate this content by drawing on patterns in that training data and predicting what is likely to come next.

Generative AI’s job is to create new content, unlike other types of AI that perform tasks such as analyzing data or helping steer a self-driving car.

Why is generative AI such a popular subject right now?

Generative AI is in the spotlight largely because of the popularity of programs such as OpenAI’s ChatGPT and DALL-E. These tools can produce code, essays, images, emails, and more in seconds, which could change how people work.

ChatGPT became hugely popular, attracting more than a million users within a week of its launch. Other big companies, including Google, Microsoft (through Bing), and Anthropic, have since joined the generative AI trend.

The excitement about generative AI is likely to keep growing as more companies adopt it and find new ways to use it in everyday products.

How does machine learning connect with generative AI?

Machine learning is a branch of AI in which a computer learns patterns from data and uses them to make predictions. For instance, DALL-E predicts what an image should look like based on the text description you give it.

Generative AI is a type of machine learning, but not all machine learning is generative AI. It is the subset in which models learn from data in order to create new content.

Which systems make use of generative AI?

Generative AI powers programs that can create new content. The best-known examples, and the ones that sparked the current excitement, are ChatGPT and DALL-E.

Because of that interest, many companies have released their own generative AI models and tools, including Google Bard, Bing Chat, Claude, PaLM 2, and LLaMA, and there are many more.

What does generative AI art mean?

Generative AI art is created by AI models trained on existing art. These models are trained on billions of images from the internet, from which they learn different art styles. When you describe what you want in a text prompt, they draw on that training to create a new image.

DALL-E is a well-known AI art generator, but there are many others that are just as good or even better for particular needs. Microsoft’s Bing Image Creator, which is powered by an advanced version of DALL-E, is regarded as one of the top AI art generators right now.

What do text-based generative AI models learn from?

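Text-based models like ChatGPT are trained on huge amounts of text through a method called self-supervised learning, in which the model learns by predicting missing or upcoming words in the text itself rather than relying on human-written labels. They use what they learn to make predictions and generate answers.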
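To make the idea of learning from the text itself more concrete, here is a tiny Python sketch. It is only an illustration and not how ChatGPT is actually built: real models use large neural networks, while this one just counts which word tends to follow which. The function names and the sample sentence are made up for this example.

```python
from collections import Counter, defaultdict


def build_training_pairs(text):
    """Turn raw text into (current_word, next_word) pairs.

    No human labeling is needed: the "answer" for each word is simply
    the word that follows it in the text.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train(pairs):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for current_word, next_word in pairs:
        counts[current_word][next_word] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequently seen follower of `word`, or None."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical sample text, standing in for "huge amounts of text".
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train(build_training_pairs(corpus))
print(predict_next(model, "the"))  # prints "cat"
```

In this sample, "the" is followed by "cat" three times and by "mat" and "fish" once each, so the sketch guesses "cat". A word it has never seen gets no guess at all, which hints at why real systems need so much training text.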

But there’s a concern with generative AI models, especially text-based ones. They are trained on large amounts of data collected from across the internet, which can include copyrighted material and information that people never agreed to share.

What are the implications of generative AI art?

Generative AI art models are trained on billions of images from the internet. Many of these images are the work of specific artists, and the AI reimagines and reuses them to create new pictures.

Even though the new picture isn’t identical to any original, it can still contain elements of an artist’s work without giving them credit. This means AI can imitate an artist’s unique style and produce new pictures without the artist’s knowledge or permission. The debate over whether AI-made art is truly ‘new’, or even ‘art’, will probably go on for a long time.

What are the drawbacks of generative AI?

Generative AI models learn from large amounts of internet content and use it to make predictions and answer what you ask. But these predictions aren’t always right, even when they sound plausible.

Sometimes the answers reflect biases in the internet content the models learned from, and it can be hard to tell when that is happening. This is a serious concern because it means generative AI can spread misinformation.

Generative AI models often can’t tell whether what they produce is accurate, and users often can’t see where the information came from or how it was used to produce the output.

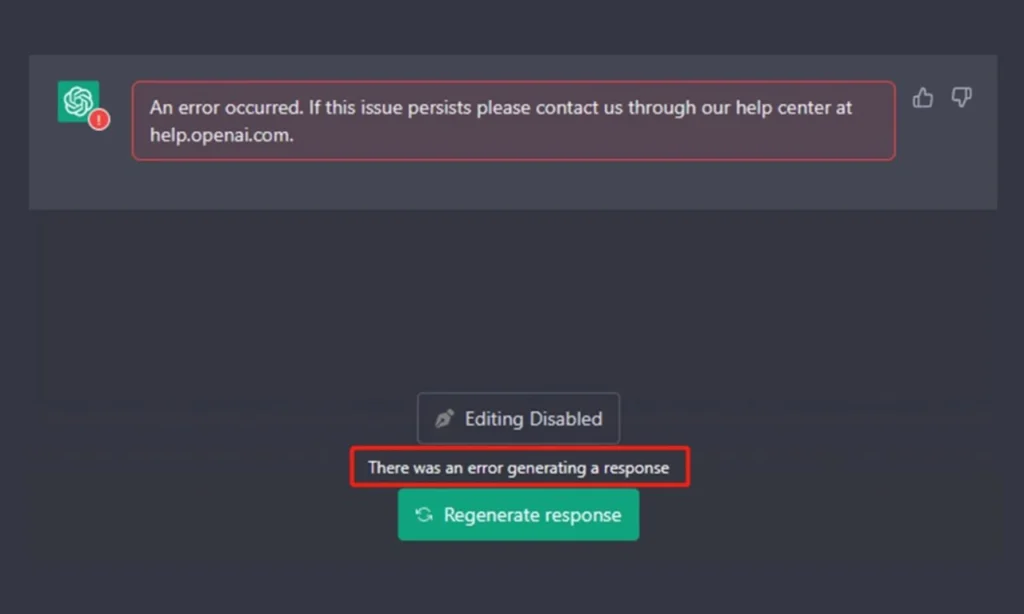

For example, chatbots can give wrong information or simply make things up. While generative AI can be fun to use, it’s not a good idea to rely on the information it gives without checking it, at least for now.

Some generative AI tools, such as Bing Chat and GPT-4, try to address this by showing where their information came from so you can check whether it’s accurate.
